RNN White Box

- Resources for learning RNN.

- Backpropagation Through Time in detail.

LSTMs and GRUs, variants of vanilla RNNs, have proven extremely effective in Computer Vision and Natural Language Processing applications. Of all the excellent resources on RNNs available online, following are the ones that helped me understand them clearly:

-

Understanding LSTM Networks: Clear explanation with block diagrams.

-

Recurrent Neural Networks Tutorial, Part 1 to Part 3: Tutorial in Theano with a touch of BPTT and vanishing/exploding gradient problem.

-

The Unreasonable Effectiveness of Recurrent Neural Networks: Types of sequence problems and fun applications in NLP.

-

Awesome Recurrent Neural Networks: A curated list of RNN resources.

Backpropagation Through Time

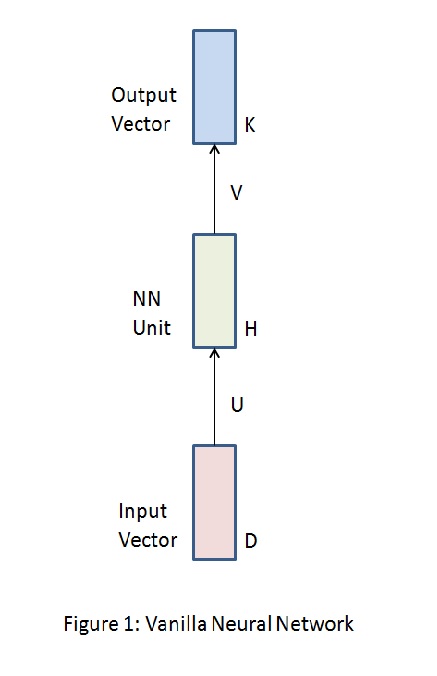

$Figure\;1$ shows a vanilla neural network architecture. $D$ dimensional input vector is connected to the neural network unit through weights $U$. The neural network unit is in turn connected to the $K$ dimensional output vector through weights $V$. The neural network unit consists of a single layer of $H$ neurons with $tanh$ activation. Gradients for optimization of this network can be computed using standard back propagation.

RNNs, on the other hand, allow mapping between variable length input sequences to variable length output sequences. Thus, gradients need to be back propagated in time to update weights. Depending on the type of application, RNN sequences can be broadly categorized (Refer Andrej Karpathy’s blog) into:

-

Synchronised input and output sequences

-

Unsynchronised input and output sequences

-

Input sequences

-

Output sequences

Synchronized input and output sequences

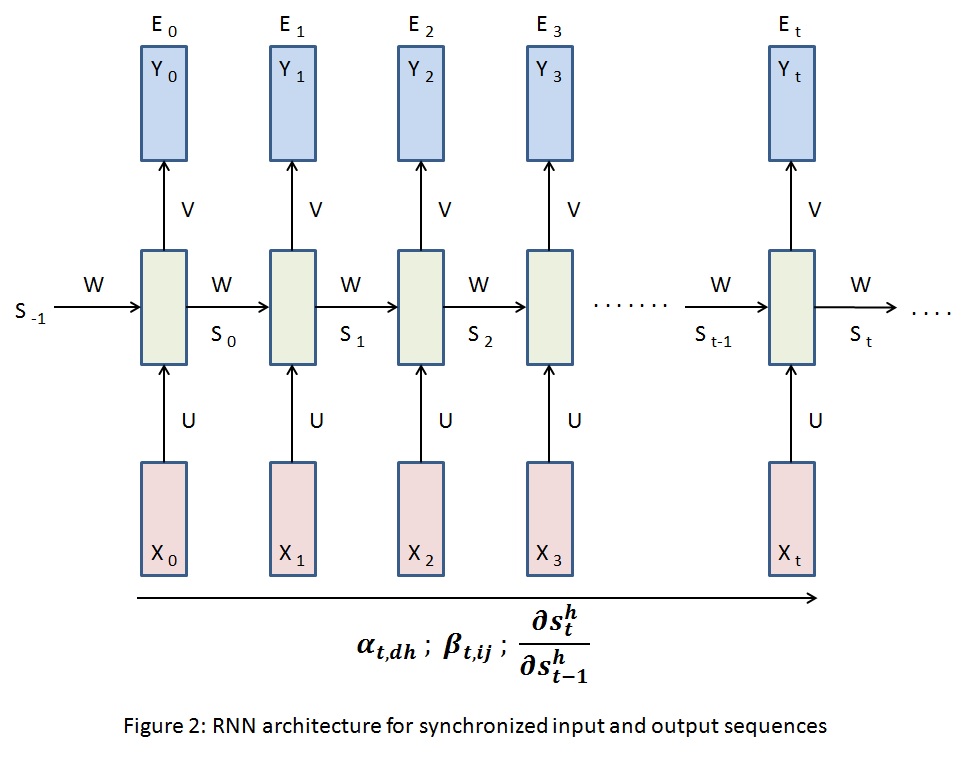

Extending vanilla neural network architecture from $Figure\;1$, $Figure\;2$ shows a sequence of neural network units mapping the input sequences $X_{i}$s to output sequences $Y_{i}$s. Such architecture finds place in frame level video classification where the prediction depends on the current frame as well as the frames that appeared before it. $S_{i}$s are the $H$ dimensional output of the hidden layer in the neural network units. These are the memory of the network which transfer previous state information along the chain. The neural network unit at $t+1$ takes input from $X_{t}$ throught $U$ and $S_{t}$ through $W$. Weights $U$, $V$ and $W$ are shared across RNN units. $S_{-1}$ is initialized to a vector of zeros.

The total loss for the above RNN is:

where $T$ is the length of input and output sequences. $\frac{\partial E}{\partial v_{hk}}$, $\frac{\partial E}{\partial u_{dh}}$, $\frac{\partial E}{\partial w_{ij}}$ are the target gradients that need to be computed.

For RNN unit at $t$, compute the following:

Finally,

where,

and are messages passed along the RNN as shown in $Figure\;2$.

Unsynchronized input and output sequences

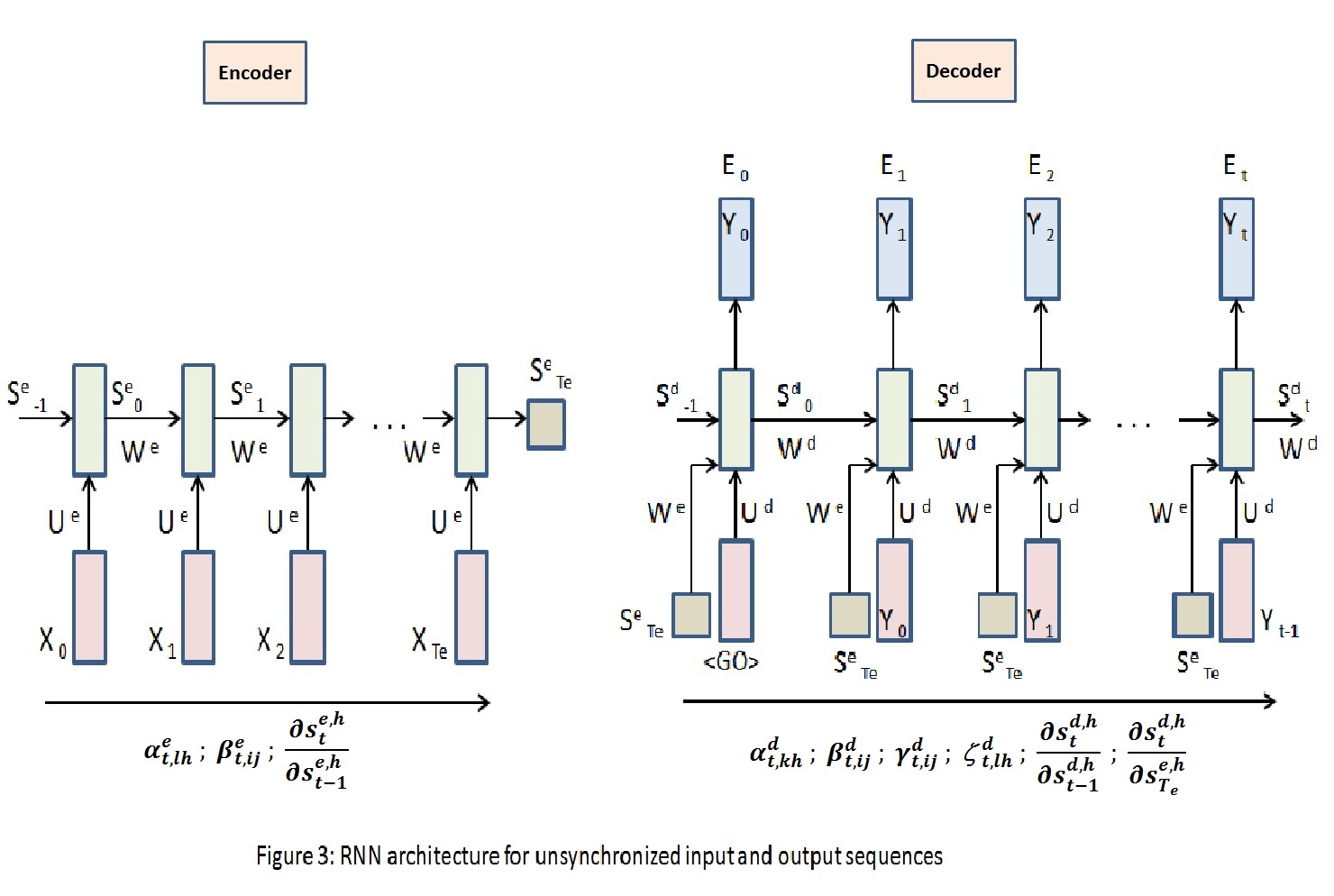

RNNs are pretty successful in machine translation applications. The architecture in $Figure\;3$ shows an encoder RNN connected to a decoder RNN through the encoder’s hidden state $S^e_{Te}$, where $Te$ is length of the input sequence to the encoder. The RNN decoder unit at $t+1$ takes $S^e_{Te}$, $Y_{t}$ and $S^d_{t}$ as inputs. In this architecture, a variable length input sequence can be mapped to a variable length output sequence. Generally, encoders and decoders use different sets of parameters as shown. Each neural network unit in the encoder and the decoder consist of a single hidden layer of $H$ neurons.

The total loss is given by equation $(\ref{1})$ and the target gradients that need to be computed are $\frac{\partial E}{\partial v^d_{hk}}$, $\frac{\partial E}{\partial u^d_{kh}}$, $\frac{\partial E}{\partial w^d_{ij}}$, $\frac{\partial E}{\partial u^e_{lh}}$ and $\frac{\partial E}{\partial w^e_{ij}}$, where superscripts $e$ and $d$ represent parameters for encoders and decoders respectively.

For encoder compute:

For decoder compute:

Hence,

where,

and are messages passed across the encoder. These are then accumulated and passed across the decoder through and . Information in the form of and also flow through the decoder for gradient computation.

Input sequences

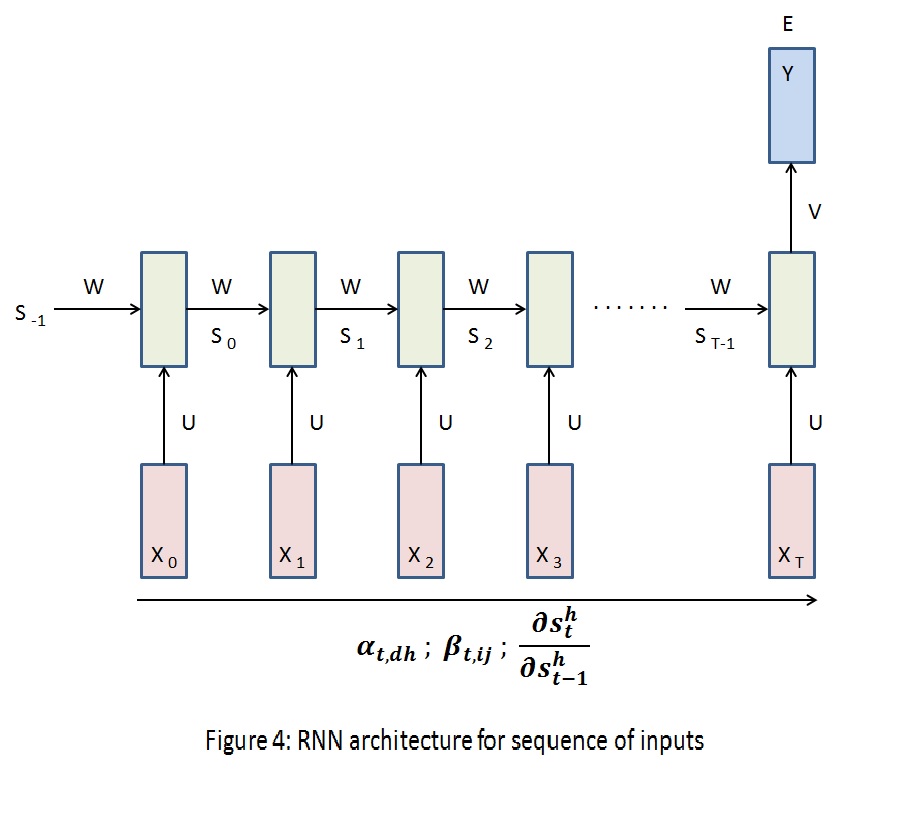

In sentiment analysis, a sequence of words is classified into positive or negative sentiments. $Figure\;4$ shows the architecture for such applications.

Gradient computations are very straight forward in this case.

And,

where and are given by equations $(\ref{4})$ and $(\ref{5})$ respectively.

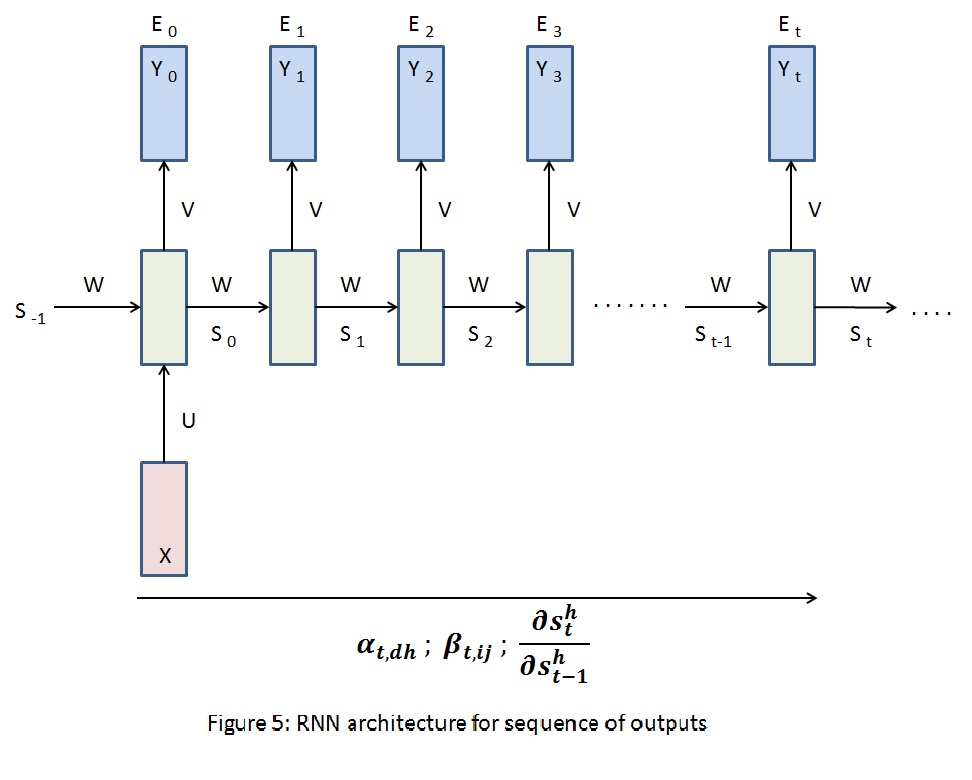

Output sequences

Image captioning takes an image and outputs a sequence of words, RNN architecture for the same is shown in $Figure\;5$. Refer equations $(\ref{2})$, $(\ref{3})$, $(\ref{5})$ for $\frac{\partial E_{t}}{\partial y^k_{t}}$, $\frac{\partial E_{t}}{\partial s^h_{t}}$ and $\beta_{t,ij}$ respectively. Target gradients $\frac{\partial E}{\partial v_{hk}}$, $\frac{\partial E}{\partial u_{dh}}$, $\frac{\partial E}{\partial w_{ij}}$ can be computed using $(\ref{6})$, $(\ref{7})$ and $(\ref{8})$. $\alpha_{t,dh}$, however, is computed as below:

where,

Conclusion

We have seen how the chain rule of calculus can be used to compute gradients in RNNs for various applications. The process can be viewed as passing messages through RNNs across time. Optimize weights using HF optimization to avoid ‘vanishing gradinets’ problem. Gradient computations for LSTMs, GRUs and other RNN variants require a similar approach.